
Do the tests described so far also work with content outside ISO-8859-1, for example Russian, Greek or Chinese text?

A difficult question. Many internal tests are run with Greek, Russian and Chinese documents, but tests for Hebrew, Arabic and Japanese documents are still missing. So it is not guaranteed that every available test works with every language, although it should.

The following hints may help not only when working with UTF-8 files under PDFUnit, but in other situations as well.

Metadata and keywords can contain Unicode characters.

If your operating system does not support fonts for foreign languages, you can use Unicode escape sequences in the format \uXXXX within strings. For example, the copyright character “©” has the Unicode sequence \u00A9:

```java
@Test
public void hasProducer_CopyrightAsUnicode() throws Exception {
  String filename = "documentUnderTest.pdf";

  AssertThat.document(filename)
            .hasProducer()
            .equalsTo("txt2pdf v7.3 \u00A9 SANFACE Software 2004") // 'copyright'
  ;
}
```

Working out the hex code of every character in a longer text by hand would be tedious. Therefore PDFUnit provides the small utility ConvertUnicodeToHex. Pass the foreign text to the tool as a string, run the program and paste the generated hex code into your test. Detailed information can be found in chapter 9.2: “Convert Unicode Text into Hex Code”.
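The following is a minimal sketch of what such a conversion does. It is not the source of ConvertUnicodeToHex; the class UnicodeEscaper and its method are hypothetical. Printable ASCII is kept, every other character becomes a Java escape with four uppercase hex digits, which matches the format of the Greek example below. Control characters such as line feeds are written as octal escapes instead, because the compiler translates Unicode escapes before it parses string literals, so an escaped line feed would break the literal.

```java
/**
 * Hypothetical helper, not the PDFUnit tool ConvertUnicodeToHex:
 * turns arbitrary text into a Java string body that uses only ASCII.
 */
public final class UnicodeEscaper {

    private UnicodeEscaper() {
    }

    /** Returns the text with every non-ASCII char replaced by a backslash-u escape. */
    public static String toJavaEscapes(String text) {
        StringBuilder sb = new StringBuilder(text.length() * 2);
        for (int i = 0; i < text.length(); i++) {
            char c = text.charAt(i); // UTF-16 code unit; surrogate pairs become two escapes
            if (c == '\\' || c == '"') {
                sb.append('\\').append(c);  // keep the string literal valid
            } else if (c < 0x20) {
                // Control characters use octal escapes: a backslash-u escape of a
                // line feed would be translated before the literal is parsed.
                sb.append(String.format("\\%03o", (int) c));
            } else if (c < 0x7F) {
                sb.append(c);               // printable ASCII stays readable
            } else {
                sb.append(String.format("\\u%04X", (int) c)); // e.g. the copyright sign
            }
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // Default input contains the copyright sign, written as an escape itself.
        String text = args.length > 0 ? args[0] : "txt2pdf v7.3 \u00A9 SANFACE Software 2004";
        System.out.println(toJavaEscapes(text));
    }
}
```

Paste the printed result between the quotes of a string literal. Keep in mind that passing non-Latin text as a command-line argument is itself subject to the encoding of the shell.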

A test with a longer sequence may look like this:

```java
@Test
public void hasSubject_Greek() throws Exception {
  String filename = "documentUnderTest.pdf";
  String expectedSubject = "Εργαστήριο Μηχανικής ΙΙ ΤΕΙ ΠΕΙΡΑΙΑ / Μηχανολόγοι";
  //String expectedSubject = "\u0395\u03C1\u03B3\u03B1\u03C3\u03C4\u03AE"
  //                       + "\u03C1\u03B9\u03BF \u039C\u03B7\u03C7\u03B1"
  //                       + "\u03BD\u03B9\u03BA\u03AE\u03C2 \u0399\u0399 "
  //                       + "\u03A4\u0395\u0399 \u03A0\u0395\u0399\u03A1"
  //                       + "\u0391\u0399\u0391 / \u039C\u03B7\u03C7\u03B1"
  //                       + "\u03BD\u03BF\u03BB\u03CC\u03B3\u03BF\u03B9";

  AssertThat.document(filename)
            .hasSubject()
            .equalsTo(expectedSubject)
  ;
}
```
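Both variants of expectedSubject must yield the same String. The literal variant is only correct if the compiler reads the source file with the encoding it was saved in, whereas the escaped variant is pure ASCII and therefore independent of it. A small check class (hypothetical, not part of PDFUnit) makes this visible, for example when setting up a new build environment:

```java
/** Hypothetical check: do literal and escaped Greek text compile to the same String? */
public final class SourceEncodingCheck {

    // Literal Greek characters: correct only if javac reads this file
    // with the encoding it was saved in (e.g. javac -encoding UTF-8).
    public static final String LITERAL = "Μηχανολόγοι";

    // The same word as escapes: pure ASCII, so independent of the source encoding.
    public static final String ESCAPED =
            "\u039C\u03B7\u03C7\u03B1\u03BD\u03BF\u03BB\u03CC\u03B3\u03BF\u03B9";

    public static boolean sourceEncodingMatches() {
        return LITERAL.equals(ESCAPED);
    }

    public static void main(String[] args) {
        System.out.println(sourceEncodingMatches()
                ? "source encoding OK"
                : "source encoding mismatch: check javac -encoding / project.build.sourceEncoding");
    }
}
```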

Chapter 13.11: “Using XPath” describes how to use XPath in PDFUnit tests. Unicode sequences can also be used in XPath expressions. The following test checks that no text node below the node rsm:HeaderExchangedDocument contains the Unicode character \u20AC (the euro sign):

```java
@Test
public void hasZugferdData_ContainingEuroSign() throws Exception {
  String filename = "ZUGFeRD_1p0_COMFORT_Kraftfahrversicherung_Bruttopreise.pdf";
  String euroSign = "\u20AC";
  String noTextInHeader =
      "count(//rsm:HeaderExchangedDocument//text()[contains(., '%s')]) = 0";
  String noEuroSignInHeader = String.format(noTextInHeader, euroSign);
  XPathExpression exprNoEuroSignInHeader = new XPathExpression(noEuroSignInHeader);

  AssertThat.document(filename)
            .hasZugferdData()
            .matchingXPath(exprNoEuroSignInHeader)
  ;
}
```
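To experiment with such an expression outside PDFUnit, it can be evaluated against plain XML with the JDK's javax.xml.xpath API. This is a sketch under stated assumptions: the class name, the sample XML and the namespace URI urn:example:rsm are invented for illustration and are not the real ZUGFeRD namespace. It shows that the Unicode escape ends up as a literal euro sign inside the XPath string and that the prefix rsm has to be bound through a NamespaceContext:

```java
import java.io.StringReader;
import java.util.Collections;
import java.util.Iterator;
import javax.xml.XMLConstants;
import javax.xml.namespace.NamespaceContext;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.xpath.XPath;
import javax.xml.xpath.XPathConstants;
import javax.xml.xpath.XPathFactory;
import org.w3c.dom.Document;
import org.xml.sax.InputSource;

public final class EuroSignXPathDemo {

    // Hypothetical namespace URI for the prefix rsm (demo only).
    public static final String RSM_URI = "urn:example:rsm";

    /** Binds the single prefix rsm; all other prefixes are unbound. */
    private static final NamespaceContext RSM_CONTEXT = new NamespaceContext() {
        @Override
        public String getNamespaceURI(String prefix) {
            return "rsm".equals(prefix) ? RSM_URI : XMLConstants.NULL_NS_URI;
        }

        @Override
        public String getPrefix(String namespaceURI) {
            return RSM_URI.equals(namespaceURI) ? "rsm" : null;
        }

        @Override
        public Iterator<String> getPrefixes(String namespaceURI) {
            return RSM_URI.equals(namespaceURI)
                    ? Collections.singletonList("rsm").iterator()
                    : Collections.<String>emptyIterator();
        }
    };

    /** Returns true if no text node below rsm:HeaderExchangedDocument contains a euro sign. */
    public static boolean headerHasNoEuroSign(String xml) throws Exception {
        DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
        factory.setNamespaceAware(true); // prefixed XPath steps need a namespace-aware DOM
        Document doc = factory.newDocumentBuilder()
                              .parse(new InputSource(new StringReader(xml)));

        String euroSign = "\u20AC";
        String noTextInHeader =
                "count(//rsm:HeaderExchangedDocument//text()[contains(., '%s')]) = 0";
        String expression = String.format(noTextInHeader, euroSign);

        XPath xpath = XPathFactory.newInstance().newXPath();
        xpath.setNamespaceContext(RSM_CONTEXT);
        return (Boolean) xpath.evaluate(expression, doc, XPathConstants.BOOLEAN);
    }

    public static void main(String[] args) throws Exception {
        String xml = "<rsm:Invoice xmlns:rsm='" + RSM_URI + "'>"
                   + "<rsm:HeaderExchangedDocument><Name>Invoice 4711</Name>"
                   + "</rsm:HeaderExchangedDocument>"
                   + "<Total>100 \u20AC</Total></rsm:Invoice>";
        // The euro sign appears only outside the header, so this prints true.
        System.out.println(headerHasNoEuroSign(xml));
    }
}
```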

Pay special attention to data read from the file system. How its bytes are interpreted depends on the encoding used on that system. Every Java program that processes files without specifying an explicit charset depends on the system property file.encoding.

There are several ways to set the encoding, either for the current shell (Windows syntax) or for a single Java call. Both spellings, UTF8 and UTF-8, are accepted:

```
set _JAVA_OPTIONS=-Dfile.encoding=UTF8
set _JAVA_OPTIONS=-Dfile.encoding=UTF-8
java -Dfile.encoding=UTF8
java -Dfile.encoding=UTF-8
```
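A minimal sketch (the class EncodingCheck is hypothetical; Java 11 or later) that prints the effective default and then reads a file with an explicit charset, so that the result no longer depends on file.encoding. Since JDK 18 the default charset is UTF-8 on all platforms (JEP 400), but older JVMs use the platform encoding:

```java
import java.io.IOException;
import java.nio.charset.Charset;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;

public final class EncodingCheck {

    /** Reads a text file with an explicit charset instead of the platform default. */
    public static String readUtf8(Path file) throws IOException {
        return Files.readString(file, StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws IOException {
        // What the current JVM uses when no charset is passed explicitly.
        System.out.println("file.encoding   = " + System.getProperty("file.encoding"));
        System.out.println("default charset = " + Charset.defaultCharset());

        // Write Greek text as UTF-8, then read it back with the right and a wrong charset.
        String greek = "\u0395\u03C1\u03B3\u03B1\u03C3\u03C4\u03AE\u03C1\u03B9\u03BF";
        Path tmp = Files.createTempFile("encoding-check", ".txt");
        try {
            Files.writeString(tmp, greek, StandardCharsets.UTF_8);
            String ok = readUtf8(tmp);
            String wrong = Files.readString(tmp, StandardCharsets.ISO_8859_1);
            System.out.println("UTF-8 read matches:      " + ok.equals(greek));    // true
            System.out.println("ISO-8859-1 read matches: " + wrong.equals(greek)); // false
        } finally {
            Files.deleteIfExists(tmp);
        }
    }
}
```

Passing the charset explicitly, as readUtf8 does, is usually more robust than relying on the -Dfile.encoding settings shown above.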

During the development of PDFUnit, two tests ran successfully under Eclipse but failed with ANT because of the active encoding.

The following command did not solve the encoding problem:

```
// does not work for ANT:
ant -Dfile.encoding=UTF-8
```

Instead, the property had to be set using JAVA_TOOL_OPTIONS. The JVM reads this environment variable at startup, so the setting also reaches JVMs that ANT forks for the tests:

```
// Used when developing PDFUnit:
set JAVA_TOOL_OPTIONS=-Dfile.encoding=UTF-8
```

You can configure “UTF-8” in many places in the 'pom.xml'. The following code snippets show some examples. Choose the right one for your problem:

```xml
<properties>
  <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
  <project.reporting.outputEncoding>UTF-8</project.reporting.outputEncoding>
</properties>
```

```xml
<plugin>
  <groupId>org.apache.maven.plugins</groupId>
  <artifactId>maven-compiler-plugin</artifactId>
  <version>2.5.1</version>
  <configuration>
    <encoding>UTF-8</encoding>
  </configuration>
</plugin>
```

```xml
<plugin>
  <groupId>org.apache.maven.plugins</groupId>
  <artifactId>maven-resources-plugin</artifactId>
  <version>2.6</version>
  <configuration>
    <encoding>UTF-8</encoding>
  </configuration>
</plugin>
```

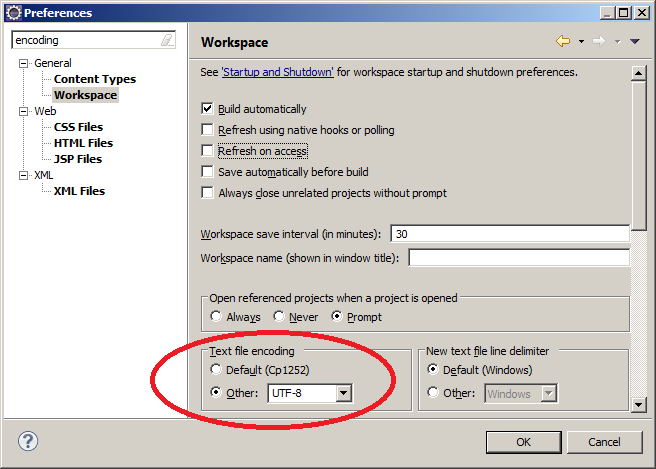

When you are working with XML files in Eclipse, you do not need to configure Eclipse for UTF-8, because UTF-8 is the default for XML files. But the default encoding for other file types is the encoding of the file system. So, it is recommended to set the encoding for the entire workspace to UTF-8:

This default can be changed for each file.

If tests of Unicode content fail, the error message may be displayed incorrectly in Eclipse or in a browser. Again, the file encoding is responsible for this behaviour. Configuring ANT for “UTF-8” should solve most of these problems. Only strings encoded in “UTF-16” may still corrupt the presentation of the error message.

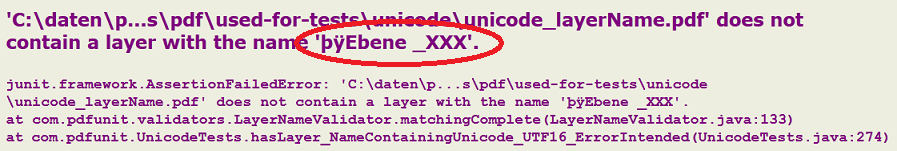

The PDF document in the next example contains a layer whose name is encoded in UTF-16BE. To show the effect of such characters on error messages, the expected layer name in the test is intentionally wrong, so that an error message is produced:

```java
/**
 * The layer name is encoded in UTF-16BE and starts with the
 * byte order mark (BOM). The error message is not complete:
 * it is corrupted by the internal null bytes.
 */
@Test
public void hasLayer_NameContainingUnicode_UTF16_ErrorIntended() throws Exception {
  String filename = "documentUnderTest.pdf";
  // String layername = "Ebene 1(4)"; // This is shown by Adobe Reader®,
  //                    "Ebene _XXX"; // and this is the string used here.
  String wrongNameWithUTF16BE =
      "\u00fe\u00ff\u0000E\u0000b\u0000e\u0000n\u0000e\u0000 \u0000_XXX";

  AssertThat.document(filename)
            .hasLayer()
            .equalsTo(wrongNameWithUTF16BE)
  ;
}
```

When the tests are executed with ANT, a browser shows the complete error message, including the trailing string þÿEbene _XXX:

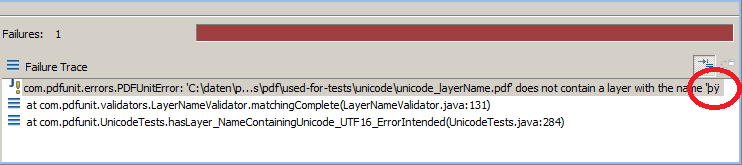

The JUnit view in Eclipse, however, cuts off the error message right after the byte order mark, at the first internal null byte:

The message '...\unicode_layerName.pdf' does not contain a layer with the name 'þÿ'

The message should end with þÿEbene _XXX.
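The unusual string in the test can be reproduced with plain Java: encode the layer name as UTF-16BE, put the byte order mark FE FF in front, and read every byte as one ISO-8859-1 character. Every ASCII letter is then preceded by a null character, and a display that stops at the first null shows only þÿ, which matches the truncated message in the Eclipse JUnit view. A sketch with hypothetical names:

```java
import java.nio.charset.StandardCharsets;

public final class Utf16BomDemo {

    /** Encodes text as UTF-16BE with a leading BOM and reinterprets each byte as one char. */
    public static String utf16beBytesAsLatin1(String text) {
        byte[] bom = {(byte) 0xFE, (byte) 0xFF};
        byte[] body = text.getBytes(StandardCharsets.UTF_16BE);
        byte[] all = new byte[bom.length + body.length];
        System.arraycopy(bom, 0, all, 0, bom.length);
        System.arraycopy(body, 0, all, bom.length, body.length);
        return new String(all, StandardCharsets.ISO_8859_1); // one char per byte
    }

    /** Mimics a display that stops at the first null character. */
    public static String cutAtFirstNull(String s) {
        int nul = s.indexOf('\0');
        return nul < 0 ? s : s.substring(0, nul);
    }

    public static void main(String[] args) {
        String raw = utf16beBytesAsLatin1("Ebene 1");
        // Print the char values as hex to stay independent of the console encoding.
        for (char c : raw.toCharArray()) {
            System.out.printf("%02X ", (int) c);
        }
        System.out.println(); // FE FF 00 45 00 62 00 65 00 6E 00 65 00 20 00 31
        System.out.println("visible chars before first null: " + cutAtFirstNull(raw).length()); // 2
    }
}
```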

A “non-breaking space” can cause problems. Because it looks like a normal space at first glance, a comparison with a normal space fails. When the Unicode sequence of the non-breaking space (\u00A0) is used instead, the test runs successfully. Here's the test:

```java
@Test
public void nodeValueWithUnicodeValue() throws Exception {
  String filename = "documentUnderTest.pdf";
  DefaultNamespace defaultNS = new DefaultNamespace("http://www.w3.org/1999/xhtml");
  String nodeValue = "The code ... the button's";
  String nodeValueWithNBSP = nodeValue + "\u00A0"; // The content terminates with a NBSP.
  XMLNode nodeP7 = new XMLNode("default:p[7]", nodeValueWithNBSP, defaultNS);

  AssertThat.document(filename)
            .hasXFAData()
            .withNode(nodeP7)
  ;
}
```
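The following sketch (class and method names are hypothetical, not part of PDFUnit) shows why the comparison with a normal space fails and why trim() does not help: Java does not treat U+00A0 as whitespace. If a test should deliberately ignore the difference, both values could be normalized first; the PDFUnit test above instead states the NBSP explicitly, which is the stricter check.

```java
public final class NbspDemo {

    /** Replaces every non-breaking space (U+00A0) with a normal space (U+0020). */
    public static String normalizeNbsp(String s) {
        return s.replace('\u00A0', ' ');
    }

    public static void main(String[] args) {
        String withNbsp = "button's" + "\u00A0";
        String withSpace = "button's ";

        System.out.println(withNbsp.equals(withSpace));                 // false
        System.out.println(Character.isWhitespace('\u00A0'));           // false
        System.out.println(Character.isSpaceChar('\u00A0'));            // true
        System.out.println(withNbsp.trim().equals("button's"));        // false: trim() keeps U+00A0
        System.out.println(normalizeNbsp(withNbsp).equals(withSpace)); // true
    }
}
```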